Visual Reference of Ambiguous Objects for Augmented Reality-Powered Human-Robot Communication in a Shared Workspace

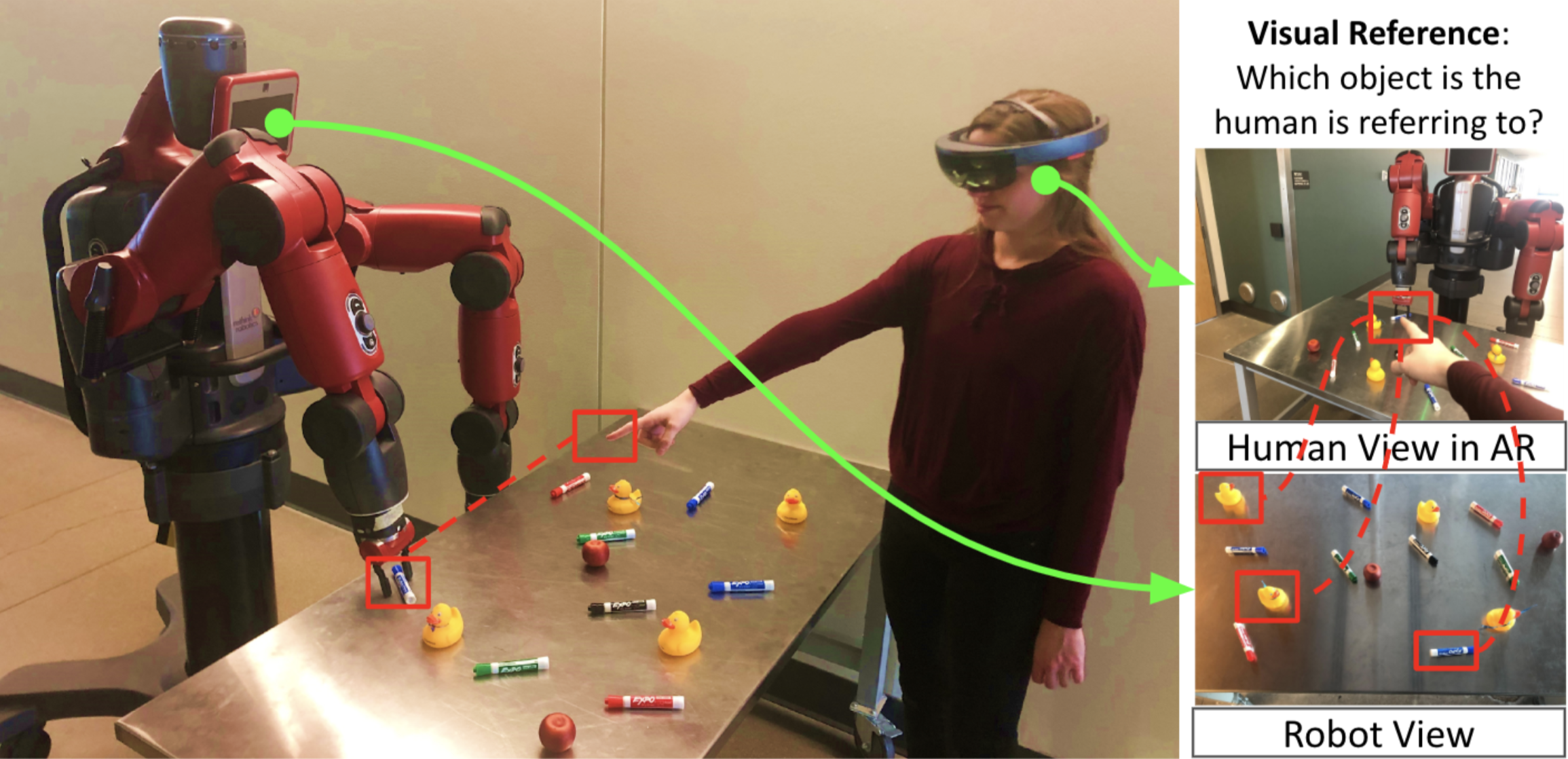

In shared workspaces, teammates working with a common set of objects must be able to unambiguously reference individual objects in order to collaborate effectively. When teammates are autonomous robots, human teammates must be able to communicate the object they intend to reference without overtly interfering with their workflow. In human-robot interaction, the problem of visual reference is defined as identifying the specific object referred to by a human (e.g., through a pointing gesture recognized by an augmented reality device) and relating this object to the corresponding object in the robotic teammate’s field of view, thereby identifying the intended object among a set of ambiguous objects. Because human and robot teammates typically observe their shared workspace from different perspectives, achieving visual reference of objects is a challenging yet crucial problem. In this paper, we present a novel graph matching-based approach to visual reference of ambiguous objects that fuses visual and spatial information about the objects in a shared workspace through augmented reality-powered human-robot communication. Our approach represents the objects in a scene as a graph in which each node is associated with an attribute vector describing an object’s appearance and the edges encode the spatial relationships among objects. We then formulate visual object reference for human-robot communication in a shared workspace as an optimization-based graph matching problem that identifies the correspondence between nodes in the graphs built from the human and robot teammates’ observations. We conduct an extensive experimental evaluation on two newly introduced datasets, showing that our approach obtains accurate visual references of ambiguous objects and outperforms existing visual reference methods.
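To make the graph matching formulation concrete, here is a minimal sketch. It is not the authors' implementation. It assumes that appearance attribute vectors are compared by cosine similarity, that spatial relationships are encoded as pairwise Euclidean distances (which stay the same under a change of viewpoint), and that the objective (node affinity plus `lam` times edge affinity) is solved by exhaustive search over assignments. The names `match_graphs`, `lam`, and `sigma` are hypothetical.

```python
import itertools
import math
from typing import List, Optional, Sequence, Tuple

Vec = Sequence[float]


def cosine(a: Vec, b: Vec) -> float:
    """Node affinity: cosine similarity of two appearance attribute vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def pairwise_dist(pos: List[Vec]) -> List[List[float]]:
    """Edge attributes: Euclidean distance between every pair of objects.
    Distances do not change under rotation/translation of the viewpoint."""
    return [[math.dist(p, q) for q in pos] for p in pos]


def match_graphs(h_feat, h_pos, r_feat, r_pos, lam=1.0, sigma=0.1):
    """Return perm mapping human node i -> robot node perm[i] that maximizes
    the sum of node affinities + lam * the sum of edge affinities.
    Exhaustive search over assignments: only practical for small scenes.
    Returns None if the robot observes fewer objects than the human."""
    n = len(h_feat)
    dh, dr = pairwise_dist(h_pos), pairwise_dist(r_pos)
    best_perm: Optional[Tuple[int, ...]] = None
    best_score = -math.inf
    for perm in itertools.permutations(range(len(r_feat)), n):
        node = sum(cosine(h_feat[i], r_feat[perm[i]]) for i in range(n))
        # Gaussian kernel rewards object pairs whose distances agree in both views.
        edge = sum(
            math.exp(-((dh[i][j] - dr[perm[i]][perm[j]]) ** 2) / (2 * sigma ** 2))
            for i in range(n)
            for j in range(i + 1, n)
        )
        score = node + lam * edge
        if score > best_score:
            best_perm, best_score = perm, score
    return best_perm


# Human's view: two identical red cubes (ambiguous) and one blue ball.
h_feat = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
h_pos = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]

# Robot's view: the same objects listed in a different order, in a frame
# rotated by 90 degrees and translated by (5, 5).
r_feat = [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
r_pos = [(3.0, 5.0), (5.0, 6.0), (5.0, 5.0)]

perm = match_graphs(h_feat, h_pos, r_feat, r_pos)
pointed = 0  # the human points at the first red cube
print(perm, "-> robot object", perm[pointed])  # (2, 1, 0) -> robot object 2
```

Because the two red cubes have identical attribute vectors, only the edge term tells them apart, which illustrates why fusing spatial and visual information matters for ambiguous objects. Exhaustive search grows factorially with the number of objects, so a practical system would substitute a dedicated graph matching solver.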

Citation: Visual Reference of Ambiguous Objects for Augmented Reality-Powered Human-Robot Communication in a Shared Workspace. Peng Gao, Brian Reily, Savannah Paul, and Hao Zhang. International Conference on Virtual, Augmented, and Mixed Reality (VAMR), 2020.