Enabling Simultaneous View and Feature Selection for Collaborative Multi-Robot Perception (Under Review by IJARS)

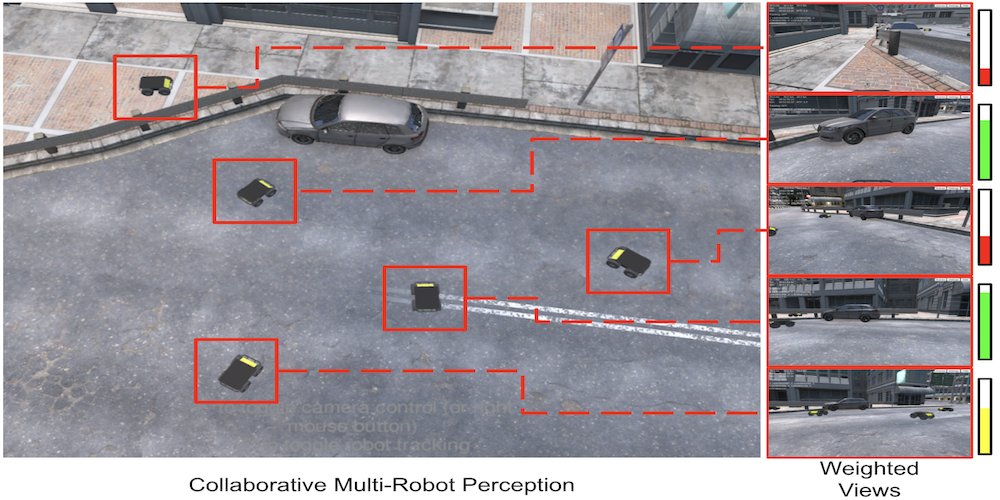

Collaborative multi-robot perception provides multiple views of an environment. These varying perspectives let robots jointly understand the environment even when individual robots have poor points of view or when obstacles cause occlusions. The multiple observations must be intelligently fused for accurate recognition, and the most relevant observations must be selected so that robots whose views are unnecessary can move on to observe other targets. This research problem has not yet been well studied in the literature. In this paper, we propose a novel approach to collaborative multi-robot perception that simultaneously integrates view selection, feature selection, and object recognition into a unified regularized optimization formulation. The formulation uses sparsity-inducing norms to identify the robots with the most representative views and the modalities with the most discriminative features. Because these non-smooth norms make the formulation hard to solve, we implement a new iterative optimization algorithm that is guaranteed to converge to the optimal solution. We evaluate our approach through a case study in simulation and on a physical multi-robot system. Experimental results demonstrate that our approach enables effective collaborative perception, achieving accurate object recognition together with effective view and feature selection.
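The abstract does not give the exact objective or solver. As a rough illustration only, the sketch below assumes a least-squares recognition loss with two group norms on the weight matrix `W`. One norm is taken over the rows belonging to each robot's view, and the other over the rows belonging to each feature modality. The sketch then minimizes this objective with a generic iteratively reweighted least-squares scheme, a common way to handle such non-smooth norms. The function names, the loss, the feature layout, and the parameters `gamma_view` and `gamma_feat` are all hypothetical; this is not the authors' formulation or algorithm.

```python
import numpy as np

def group_weights(W, groups, eps=1e-8):
    """Diagonal reweighting for the group norm sum_g ||W[g]||_F.

    Every row in group g gets weight 1 / (2 * ||W[g]||_F), the usual
    reweighted surrogate of a non-smooth group norm; eps avoids division by 0.
    """
    d = np.zeros(W.shape[0])
    for rows in groups:
        d[rows] += 1.0 / (2.0 * np.linalg.norm(W[rows]) + eps)
    return d

def view_feature_selection(X, Y, view_groups, feat_groups,
                           gamma_view=1.0, gamma_feat=1.0, iters=100, tol=1e-6):
    """Hypothetical objective:
        ||X W - Y||_F^2 + gamma_view * sum_v ||W_v||_F + gamma_feat * sum_m ||W_m||_F
    Setting the gradient to zero gives (X^T X + diag(d)) W = X^T Y, where d
    holds the current group weights; we re-solve until W stops changing.
    """
    XtX, XtY = X.T @ X, X.T @ Y
    W = np.linalg.solve(XtX + np.eye(X.shape[1]), XtY)  # ridge warm start
    for _ in range(iters):
        d = (gamma_view * group_weights(W, view_groups)
             + gamma_feat * group_weights(W, feat_groups))
        W_new = np.linalg.solve(XtX + np.diag(d), XtY)
        converged = np.linalg.norm(W_new - W) < tol
        W = W_new
        if converged:
            break
    return W

# Toy usage: 2 robots (views) x 2 modalities x 3 dims = 12 features.
# Feature index = view * 6 + modality * 3 + k; only view 0 is informative.
rng = np.random.default_rng(0)
n, c = 200, 2
X = rng.standard_normal((n, 12))
W_true = np.zeros((12, c))
W_true[0:6] = rng.standard_normal((6, c))
Y = X @ W_true + 0.01 * rng.standard_normal((n, c))

view_groups = [list(range(0, 6)), list(range(6, 12))]
feat_groups = [[0, 1, 2, 6, 7, 8], [3, 4, 5, 9, 10, 11]]
W = view_feature_selection(X, Y, view_groups, feat_groups,
                           gamma_view=5.0, gamma_feat=1.0)

# Per-view row-block norms act as view-selection scores.
view_scores = [np.linalg.norm(W[g]) for g in view_groups]
print(view_scores)
```

In this toy setting, the rows of `W` tied to the uninformative second view are driven toward zero. This is how a group norm can mark a robot's view as unnecessary. The same mechanism applied to the modality groups would flag non-discriminative features.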

Citation: Enabling Simultaneous View and Feature Selection for Collaborative Multi-Robot Perception. Brian Reily and Hao Zhang. International Journal of Advanced Robotic Systems (IJARS), Submitted 2021.